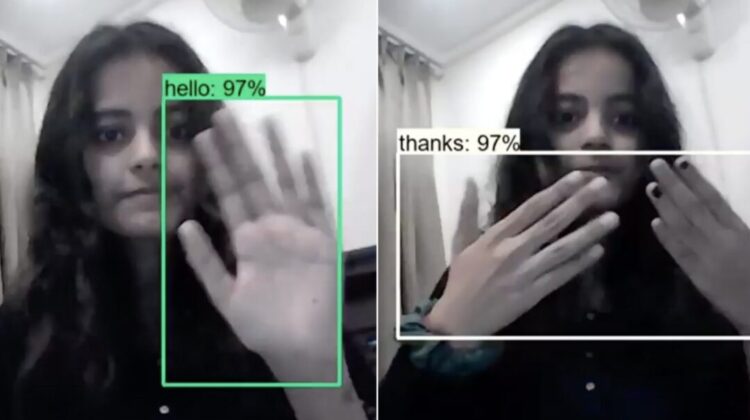

Priyanjali Gupta, an engineering student at India’s Vellore Institute of Technology (VIT), has built an artificial intelligence model that translates American Sign Language (ASL) into English in real time. It is a step toward bridging the communication gap between people who sign and people who don’t.

Inspired by data scientist Nicholas Renotte’s work on sign language detection, Gupta built her model with TensorFlow’s object detection API. Rather than training from scratch, she started from a pre-trained model called ssd_mobilenet. This approach let her AI locate hands in each frame and classify their shapes and positions as signs, which is the foundation for interpreting sign language.
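For readers curious about what happens after detection: object detection models such as SSD MobileNet typically return, for each frame, a set of candidates with class IDs and confidence scores, and a translation layer then has to decide which sign, if any, to display. The sketch below is a minimal, hypothetical illustration of that step in plain Python. The label map, sign names, and the 0.5 threshold are assumptions for illustration, not details from Gupta’s project.

```python
from typing import Dict, List, Optional

# Hypothetical label map: IDs and sign names are illustrative only,
# not taken from Gupta's model.
LABEL_MAP: Dict[int, str] = {
    1: "hello",
    2: "thanks",
    3: "yes",
    4: "no",
}


def top_sign(classes: List[int], scores: List[float], threshold: float = 0.5) -> Optional[str]:
    """Return the label of the highest-scoring detection at or above threshold.

    `classes` and `scores` are parallel lists for one frame, as an object
    detector might produce. Returns None if nothing is confident enough.
    """
    # Keep only confident detections whose class ID we know how to name.
    candidates = [
        (score, class_id)
        for class_id, score in zip(classes, scores)
        if score >= threshold and class_id in LABEL_MAP
    ]
    if not candidates:
        return None
    # Tuples compare by score first, so max() picks the most confident detection.
    _, best_class = max(candidates)
    return LABEL_MAP[best_class]


print(top_sign([2, 1, 4], [0.91, 0.40, 0.75]))  # thanks
print(top_sign([3], [0.20]))                     # None
```

Filtering before choosing the maximum keeps low-confidence guesses off the screen; a real system would likely also smooth predictions across several frames so the displayed word doesn’t flicker.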

Gupta acknowledges that building a deep learning model specifically for sign language recognition is difficult. Still, she is optimistic that the open-source community will produce more sophisticated solutions, and she expects that deep learning models dedicated to sign languages will eventually be built.

Gupta’s project isn’t the first attempt to break down this communication barrier. In 2016, University of Washington students Thomas Pryor and Navid Azodi developed “SignAloud,” a pair of gloves that translated sign language into speech or text. Their invention won the Lemelson-MIT Student Prize.

Gupta’s AI model is a promising step. Tools like it could make everyday communication easier for deaf and hard-of-hearing people, moving toward real-time conversations between signers and non-signers without an interpreter. Her project offers a glimpse of what more accessible communication technology could look like.
