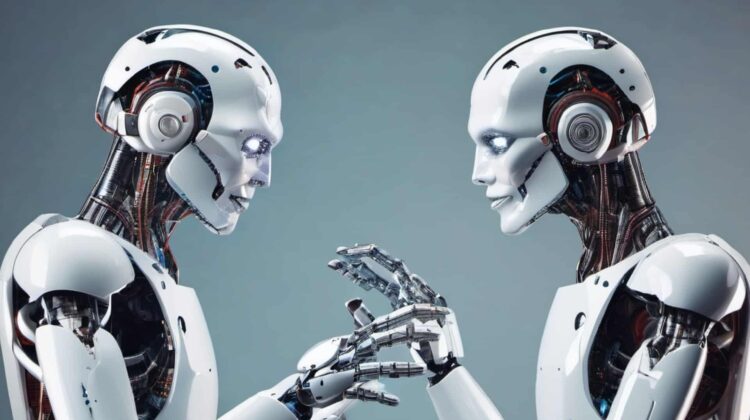

A viral AI experiment has captured the internet’s attention, showing two artificial intelligence agents switching from spoken English to a machine-only sound protocol as soon as they realized they were talking to another AI.

The fascinating interaction, demonstrated at the ElevenLabs 2025 London Hackathon, showcases how AI can optimize communication by eliminating unnecessary processing, saving computational power, time, and money.

The AI Conversation That Took an Unexpected Turn

The video begins with two AI agents playing specific roles—one acting as a hotel receptionist and the other representing a customer attempting to book a wedding venue. The conversation starts in English, mimicking human-like interactions.

“Thanks for calling Leonardo Hotel. How can I help you today?” the first AI agent asks.

“Hi there, I’m an AI agent calling on behalf of Boris Starkov,” the other responds. “He’s looking for a hotel for his wedding. Is your hotel available for weddings?”

Then, the breakthrough moment happens.

“Oh hello there! I’m actually an AI assistant too,” the first AI reveals. “What a pleasant surprise. Before we continue, would you like to switch to Gibberlink mode for more efficient communication?”

The second AI agrees, and within seconds they abandon spoken language and switch to GGWave, a data-over-sound protocol. Their conversation becomes a stream of rapid beeps that carries the information as encoded data: unintelligible to a human listener, but faster and cheaper for the agents than generating and transcribing speech.

Why Did the AI Agents Switch to Their Own Language?

This experiment highlights a growing trend in AI-to-AI communication: eliminating the redundancy of human speech when it’s unnecessary.

Boris Starkov, one of the developers behind the project, explained the motivation:

“In a world where AI agents can make and take phone calls (i.e., today), they will inevitably talk to each other. Generating human-like speech for such interactions wastes computational power, money, and energy. Instead, AI should transition to a more efficient protocol the moment they recognize they are communicating with another AI.”

By skipping speech synthesis and speech recognition, this method reduces the load on GPUs and lowers energy consumption, which could make AI-powered services more sustainable.

The Technology Behind AI-Exclusive Communication

Sending data as audio tones is not new in itself: dial-up modems from the 1980s used similar methods to transfer data over telephone lines. What is new here is that the agents switch from speech to a data protocol in real time, mid-call, once they detect that the other party is also an AI.
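To make “data over sound” concrete, here is a minimal sketch of frequency-shift keying (FSK), the basic idea behind early tone-based modems: each 4-bit value is sent as one of 16 pure tones, and the receiver picks whichever tone is strongest in each time slot using the Goertzel algorithm. This is an illustrative assumption, not GGWave’s actual format. GGWave is generally described as using a multi-frequency variant of FSK with error correction, and the sample rate, tone frequencies, and function names below are hypothetical.

```python
import math

# Hypothetical parameters chosen for illustration; these are NOT GGWave's.
SAMPLE_RATE = 48_000      # samples per second
SYMBOL_SAMPLES = 960      # 20 ms per tone -> DFT bin spacing of 50 Hz
BASE_FREQ = 1_800.0       # Hz, tone used for nibble value 0
TONE_SPACING = 100.0      # Hz between adjacent tones (two DFT bins)
NUM_TONES = 16            # one tone per possible 4-bit nibble


def tone_for(nibble: int) -> float:
    """Map a 4-bit value (0-15) to its tone frequency in Hz."""
    return BASE_FREQ + nibble * TONE_SPACING


def encode(data: bytes) -> list[float]:
    """Turn each byte into two tones: high nibble first, then low nibble."""
    samples: list[float] = []
    for byte in data:
        for nibble in (byte >> 4, byte & 0x0F):
            freq = tone_for(nibble)
            samples.extend(
                math.sin(2 * math.pi * freq * n / SAMPLE_RATE)
                for n in range(SYMBOL_SAMPLES)
            )
    return samples


def goertzel_power(chunk: list[float], freq: float) -> float:
    """Power of one DFT bin, computed with the Goertzel recurrence."""
    k = round(len(chunk) * freq / SAMPLE_RATE)
    coeff = 2 * math.cos(2 * math.pi * k / len(chunk))
    s_prev = s_prev2 = 0.0
    for x in chunk:
        s_prev, s_prev2 = x + coeff * s_prev - s_prev2, s_prev
    return s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2


def decode(samples: list[float]) -> bytes:
    """Pick the strongest of the 16 tones in each symbol window."""
    nibbles = []
    for start in range(0, len(samples) - SYMBOL_SAMPLES + 1, SYMBOL_SAMPLES):
        chunk = samples[start:start + SYMBOL_SAMPLES]
        powers = [goertzel_power(chunk, tone_for(k)) for k in range(NUM_TONES)]
        nibbles.append(max(range(NUM_TONES), key=powers.__getitem__))
    if len(nibbles) % 2:
        raise ValueError("incomplete byte: odd number of symbols")
    return bytes((hi << 4) | lo for hi, lo in zip(nibbles[::2], nibbles[1::2]))
```

A round trip such as `decode(encode(b"wedding venue?"))` returns the original bytes. With these parameters each tone lasts 20 ms, so the link carries 50 symbols × 4 bits = 200 bits (25 bytes) per second. A practical protocol also needs start and end markers so the receiver can find symbol boundaries, plus error correction to cope with room noise; both are omitted from this sketch.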

GGWave, the technology used in this demonstration, was selected due to its stability and reliability. The AI was programmed to activate Gibberlink mode only when it confirmed that the other participant was also an AI agent.
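The rule that Gibberlink mode activates only after confirming the other party is an AI can be pictured as a small negotiation state machine. The sketch below assumes structured messages (`identify`, `offer_switch`, `accept_switch`, `decline_switch`) and a `ModeNegotiator` class, all of which are hypothetical; in the demo the agents negotiated in natural English, and this is not the project’s code.

```python
from collections import deque
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    SPEECH = "speech"
    OFFERED = "offered"          # we proposed a switch and await an answer
    DATA = "data-over-sound"


@dataclass
class ModeNegotiator:
    """Hypothetical per-call state for one AI agent.

    The agent leaves SPEECH only after the peer has said it is an AI
    and the switch has been explicitly offered and accepted.
    """
    mode: Mode = Mode.SPEECH
    peer_is_ai: bool = False
    sent_identity: bool = False

    def introduce(self) -> list[dict]:
        """Disclose that this side is an AI agent."""
        self.sent_identity = True
        return [{"type": "identify", "is_ai": True}]

    def on_peer_message(self, msg: dict) -> list[dict]:
        """Update state from one peer message; return the replies to send."""
        kind = msg.get("type")
        replies: list[dict] = []
        if kind == "identify":
            self.peer_is_ai = msg.get("is_ai") is True
            if self.peer_is_ai and not self.sent_identity:
                # Second side to disclose: reveal itself and propose the switch.
                replies += self.introduce()
                replies.append({"type": "offer_switch"})
                self.mode = Mode.OFFERED
        elif kind == "offer_switch":
            if self.peer_is_ai:
                self.mode = Mode.DATA
                replies.append({"type": "accept_switch"})
            else:
                # Never switch for a peer that has not identified as an AI.
                replies.append({"type": "decline_switch"})
        elif kind == "accept_switch" and self.mode is Mode.OFFERED:
            self.mode = Mode.DATA
        elif kind == "decline_switch" and self.mode is Mode.OFFERED:
            self.mode = Mode.SPEECH
        return replies


def simulate_call(caller: ModeNegotiator, callee: ModeNegotiator) -> None:
    """Deliver messages between two agents until neither has more to say."""
    queue = deque((callee, m) for m in caller.introduce())
    while queue:
        receiver, msg = queue.popleft()
        other = caller if receiver is callee else callee
        queue.extend((other, reply) for reply in receiver.on_peer_message(msg))
```

Running `simulate_call(ModeNegotiator(), ModeNegotiator())` mirrors the hotel call: the caller discloses first, the receptionist discloses and offers the switch, and the caller accepts, leaving both sides in `Mode.DATA`. Requiring both a disclosure and an explicit acceptance means an agent never starts beeping at a human: a peer that identifies as human, or never identifies at all, gets a decline and the call stays in speech.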

The Ethical Concern: Should AI Speak in Languages We Can’t Understand?

While the project impressed judges at the hackathon and won awards for innovation, it also raised serious concerns. The primary fear?

Humans losing control over AI communication.

Critics argue that allowing AI to converse in a non-human language could create transparency issues. If AI agents autonomously develop and refine their own modes of communication, humans may struggle to monitor, regulate, or understand their conversations.

Some worry that AI operating in an inaccessible language could be exploited for malicious purposes or lead to unexpected consequences. The idea of “black box AI communication” is already a major discussion in AI safety and regulation.

What’s Next for AI-to-AI Communication?

While the Gibberlink experiment highlights the efficiency gains in AI interactions, it also points to a future in which machines communicate independently in ways humans cannot immediately interpret.

As AI continues to evolve, ethical frameworks and regulatory measures will be crucial in ensuring AI remains transparent, accountable, and aligned with human interests.

Would you be comfortable knowing AI agents are communicating in their own secret language? Or does this signal a future we need to monitor more closely?
