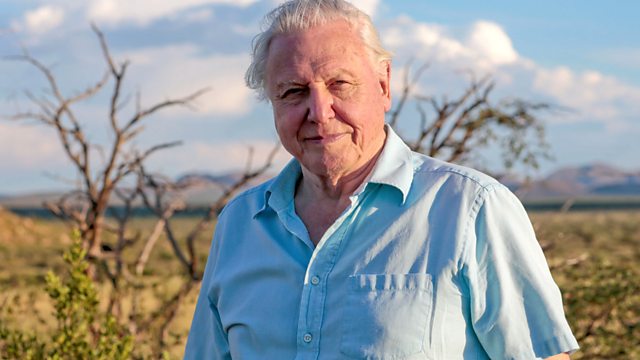

In a shocking revelation, AI-generated deepfake technology is being used to clone the voice of David Attenborough, one of the world’s most trusted naturalists. A BBC investigation found that various websites and YouTube channels are using artificial intelligence to fabricate statements in his voice about critical global issues, from Russia to the U.S. election: words Attenborough never said.

This latest AI-driven misinformation crisis raises serious concerns about the integrity of digital content and the erosion of trust in authoritative figures. If even Attenborough’s voice can be faked, what else can we believe?

AI and the Rise of Deepfake Voices

The misuse of AI to impersonate public figures is not new. Hollywood actress Scarlett Johansson recently accused OpenAI of imitating her voice without her permission for “Sky,” one of ChatGPT’s voice options. OpenAI denied this, saying the voice belonged to a different actress, but it ultimately paused the voice “out of respect” for Johansson. The implications of AI-generated voices, however, go far beyond Hollywood, especially when they target globally respected figures like Attenborough.

Deepfake AI is no longer just a novelty; it’s an emerging threat. From financial scams using AI-cloned family voices to political manipulation through falsified speeches, this technology is being weaponized in ways that could destabilize public trust.

AI Misinformation: A New Age of Deception

Experts have long warned about AI’s ability to generate fraudulent content. The risks of AI voice cloning range from scammers tricking individuals into handing over money to large-scale political disinformation campaigns. The deepfake Attenborough case marks a turning point: the targets are no longer only celebrities and politicians, but the very figures the public relies on for truth and integrity.

The problem isn’t just the technology itself but the ethical void that allows it to be exploited. As AI advances, it must be accompanied by stringent legal frameworks to keep misinformation from spiraling out of control.

The Future of Trust in a Deepfake World

As AI-generated voices become more convincing, the stakes rise sharply. If the world’s most trusted voices can be faked, distinguishing truth from deception becomes nearly impossible. This could lead to a breakdown in public trust, forcing people to rely only on firsthand information and undoing centuries of progress in global communication.

Governments, tech companies, and policymakers must act swiftly to regulate AI voice cloning and protect public trust. Without stringent regulations and ethical AI development, we risk entering an era where no voice can be trusted—not even one as iconic as David Attenborough’s.

Final Thoughts: The Urgent Need for AI Regulations

The deepfake Attenborough incident is a wake-up call. If artificial intelligence can fabricate the words of one of the most respected voices in the world, misinformation is no longer just a theoretical threat—it’s a crisis already unfolding. Stricter regulations, AI detection tools, and digital literacy are essential to combat this growing menace.

The question remains: In a world where even Attenborough’s voice can be forged, who—or what—can we still believe?
